In-person gatherings of HPA and NAB 2023 confirm the industry’s technology footprint has touched back down with hefty numbers and super-engaging IP.

by David Geffner / Photos Courtesy of HPA and NAB

The tip-off was easy. When I arrived an hour into the HPA (Hollywood Professional Association) Tech Retreat this past February at the Westin Mission Hills in Rancho Mirage, CA, the main ballroom was standing-room only. Seated attendees for the Tuesday Super Session were jammed side-by-side, just like in those pre-COVID years when ideas (and snacks) moved as freely as the wind in the Coachella Valley.

Things weren’t exactly the same, of course. The entire day was centered around the unusual production workflow for Avatar: The Way of Water, which somehow barely missed a beat during the darkest days of the pandemic. As I walked in, fatigued from the usual traffic slog coming down from L.A., I saw Local 600 Director of Photography Russell Carpenter, ASC, talking to attendees on the Westin’s big screen – quarantine had kept the cinematographer from joining the rest of the in-person panelists.

No matter. As HPA’s Tech Retreat has consistently shown during its surprisingly long-lived tenure (2025 will mark the group’s 30th retreat), where there is new technology and people who thrive on getting the most from complex and ever-evolving tools, there will always be an HPA session to enlighten, entertain and unify.

So it was with HPA 2023 – one of the most engaging and thought-provoking retreats in recent memory. Carpenter’s session (the first of the morning) was moderated by HPA Board Member and HPA Engineering Awards Committee Chair Joachim Zell. Joining the Oscar-winning Director of Photography on the panel were 3D Camera Systems Workflow Engineer (and Local 600 Operator member) Robin Charters, Sony VENICE Product Director Simon Marsh, and EFILM Colorist Tashi Trieu. I walked in during a breakdown of a shot where Spider (Jack Champion) has to run with members of the Na’vi tribe. Carpenter was talking about his crew’s use of the TechnoDolly, into which data was programmed to mirror director James Cameron’s virtual camera.

“Jim can operate the virtual camera handheld,” Carpenter explained, “so we’d go through this fusion period at the beginning of each take. We’d line up the virtual camera with a marker for our live-action camera – height, angle and lens, all the same. As the computers would then know where everything was, the tables sort of turned and our live-action camera could pan around like Jim’s virtual camera – in this example, it was handheld – while our Simulcam system immediately gave us the background and foreground information.

“The Simulcam works with depth sensors,” Carpenter continued. “It knows Spider is five feet away, the Na’vi is seven feet away, and it will look like [the Na’vi] is passing behind [Spider]. If there’s someone three feet away in the foreground, they can pass in front of [Spider] in real-time and you get this incredible real-time composite. That allowed us to know if our lighting was working and whether our live-action actor blended seamlessly into this virtual environment.”
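What Carpenter describes boils down to a per-pixel depth test, the same z-buffer comparison used in real-time graphics: at every pixel, whichever element is nearer the camera wins. The sketch below is only an illustration of that idea, assuming the system already has a depth value for each pixel of both the live-action plate and the CG render; it is not the actual Simulcam or WETA FX implementation, and the function name `depth_composite` is hypothetical.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def depth_composite(
    live: Sequence[Sequence[T]],
    live_depth: Sequence[Sequence[float]],
    cg: Sequence[Sequence[T]],
    cg_depth: Sequence[Sequence[float]],
) -> List[List[T]]:
    """Hypothetical per-pixel depth composite (a simple z-buffer test).

    For every pixel, keep whichever layer is closer to the camera.
    Ties go to the live-action layer.
    """
    result: List[List[T]] = []
    for row_live, row_ld, row_cg, row_cd in zip(live, live_depth, cg, cg_depth):
        result.append(
            [
                px_live if d_live <= d_cg else px_cg
                for px_live, d_live, px_cg, d_cg in zip(row_live, row_ld, row_cg, row_cd)
            ]
        )
    return result


# Distances in feet, echoing Carpenter's example; green screen has no depth (infinite).
INF = float("inf")
frame = depth_composite(
    live=[["Spider", "green screen", "Spider"]],
    live_depth=[[5.0, INF, 5.0]],
    cg=[["Na'vi", "Na'vi", "CG foreground"]],
    cg_depth=[[7.0, 7.0, 3.0]],
)
print(frame)  # [['Spider', "Na'vi", 'CG foreground']]
```

Pixel values are labels here for readability. A real on-set system would compare full-resolution color frames and depth maps every frame, and would likely need soft edges rather than this hard per-pixel switch.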

One captivating aspect of the Avatar presentation was Carpenter’s screening of footage from the movie in various formats. In the Westin Mission Hills ballroom’s state-of-the-art theater, attendees saw 2K, 4K, 24 fps, 48 fps (per eye), 2D and 3D, projected at a bright 14 foot-lamberts. Insisting that “we could only create such precise surgical-like lighting on set due to the incredible image quality of the Sony VENICE’s high ISO,” Carpenter later broke down a scene where Spider and the Na’vi plunge deep under the ocean’s surface. “We shot in a large tank using a machine to generate motion to alter the character of the water,” he noted. “The interaction between Spider and the other Na’vis is a game-changer. Hats off to WETA [the New Zealand-based Digital VFX company founded by Peter Jackson], as they spent years researching how to create realistic water for this scenario. The area around the island, meaning the real water, was only extended about five feet and the rest was generated by WETA, and masterfully so.”

Zell, who recalled visiting the massive water-tank stage in Manhattan Beach, CA to calibrate the on-set Sony X300 monitors to the same specifications as the monitors at EFILM, where Avatar would be graded, asked Trieu how early he came onto the project. “It was in 2018 when Russell and Robin first came in with camera tests to compare different colors and their retention (or lack thereof) of polarization through the beam splitter,” Trieu explained. “The amount of detail was incredibly impressive, and that’s because of the years of runway they had leading into production. When you compare the live-action photography in Avatar, released in 2009, to The Way of Water, it’s a generational improvement. There was so much in the first movie that was good but still had technical challenges, specifically polarization artifacts through the beam splitter. All of that was massaged so carefully in the second movie that it provides a unique and superior 3D experience to anything else that’s been produced.”

When asked by Zell if the 3D capture rigs needed to be modified to shoot underwater, Charters laughed and said, “Everything on this film was modified. There was technology that came from the first Avatar, and many other films before Way of Water that all served as proving grounds – Life of Pi on through to The Jungle Book and Alita: Battle Angel.

“We selected new mirrors, which meant approaching high-end aerospace companies that are doing electro-deposits on glass,” Charters continued. “We thought we’d only make one underwater rig, but realized pretty quickly that because of the viewing angles we needed and the physical space where the cameras had to be placed, we needed three. One had ‘wet’ Nikonos lenses, designed for underwater photography, which also allowed the mirror to be in the water; the waterproof lenses and attached electronics were made into separate camera body housings. We also made a nano-camera that was mounted into a little housing called the ‘Dream Chip,’ with Fujinon C-mount lenses. It was a side-by-side rig that was quite small and used to film the actors inside the subs as the subs were flooding.”

Following Carpenter’s panel was one of the most stimulating sessions of the entire retreat – veteran industry reporter Carolyn Giardina speaking live with Way of Water Executive Producer Jon Landau via a remote connection on the big screen. Landau talked about traveling from New Zealand, at the height of the COVID lockdown, to visit theater exhibitors around the world to showcase in-production footage from The Way of Water.

“It was my own little CinemaCon, where we had the top domestic distributors gathered together, and then that same community in Europe,” Landau smiled. “What we do is a partnership with the exhibition community, and I wanted to ensure their infrastructure could support where we wanted to take this second Avatar. Our goal with this movie was to change what people say when they leave the theater, from ‘I saw a movie’ to ‘I experienced a movie.’ The exhibitors are such a huge partner in that. If they can provide a quality experience from the moment you buy tickets, and supply content, not just for tentpole movies like Avatar but for all kinds of movies, for 52 weeks a year, then we will keep the movie business alive.”

When Giardina asked the producer why they chose to incorporate 48-frames-per-second photography with 3D capture, Landau noted that “the two formats go hand-in-hand. We see the world in 3D, but we don’t [see] some of the artifacts of projection like a shutter and strobing. To create this truly engaging experience, we asked ourselves, ‘How do we get rid of the artificiality that’s a result of technological limits from another time?’ Twenty-four frames per second was created as a standard because the studios were cheap, and it was the slowest frame rate they could use at the time of talkies, where sound would not distort. It had nothing to do with the visual cortex.”

Landau explained that “ten years ago, Russell Carpenter shot a series of 3D scenes at different frame rates, and coming out of those tests, we felt 48 was the prime spot for certain shots, but not everything.” He added, “For example, we chose to do all of the underwater sequences at a higher frame rate, and selectively use it for action sequences. We thought some action scenes, with handheld camera, for example, would benefit more from that kinetic feel we’re all so used to seeing at 24 frames per second.”

When Giardina asked about new tools pioneered on Way of Water, Landau pointed to the use of real-time depth compositing as something that will benefit the entire industry. “Not every film will need underwater motion capture or facial capture,” he observed. “But so many movies today do add in elements, whether that’s against blue or green screen or whatever.”

Elaborating on the evolution of the Simulcam system created for the first Avatar, Landau noted that “the original Simulcam system fed images that were not there to the eyepiece of the live-action camera, meaning the images the on-set green screen would later be replaced with. It was at a low-res level, but it allowed the operator a much more accurate approach to composition and took out the guesswork. It was what I called ‘the weatherman effect,’ as it was able to put a person or object in front of the green screen on a single plane.

“For the sequel,” he added, “we had much more live-action/CG interaction in a given scene, so we challenged WETA FX to create what we called ‘real-time depth compositing.’ Looking through the lens, the Simulcam system would now determine where the characters were in 3D space, where the human objects were on the set in 3D space, and where in 3D space the CG elements were. It would do a real-time composite with characters walking behind or in front of virtual elements, which allowed Jim and his operators to shoot as if everything was present on the set. Downstream, the editors now had composited shots with CG characters [moving freely through the scene] to start making editorial choices. They didn’t have to do some kind of ‘post-vis,’ waiting days or even weeks for VFX shots to be put into the editorial workflow. We then could turn over those temporary composites to WETA FX so they would know exactly how everything lined up.”

Lining things up also factored into several illuminating panels during the Tech Retreat’s Wednesday and Thursday sessions. Those included a presentation from Dr. Tamara Seybold, technical lead of the Image Science team in ARRI’s R&D department in Munich, and her colleague, Carola Mayr, ARRI image science engineer, about the “Textures” feature in the ALEXA 35. Introduced in 2022 with a Super 35 sensor and 4K resolution, the ALEXA 35 has redesigned image processing that includes two features: Reveal Color Science (discussed at HPA 2022) and ARRI Textures, billed as a new path to creative control.

Seybold explained that Textures was born after the ARRI team visited a cinema conference in Oslo, Norway, “where we asked all the creatives what they wanted to improve with the ALEXA’s image quality, and they said, ‘Everything looks great, but everything looks the same.’ Basically, that meant these DPs wanted more control over the image. So, we thought: ‘What is used to create the look of an image?’ We started with traditional in-camera approaches like optical filters, vintage lenses, a Pro-Mist filter, setting the basic exposure, and color, of course.”

Before Textures, ARRI cameras came preinstalled with a single texture setting. “That meant we optimized all the image processing for the widest range of shooting scenarios we could think of,” Seybold shared. “With the ALEXA 35 we still have one default texture setting preinstalled in the camera, but we now also have different texture curves with different characteristics. Technically, Textures is one file where all the image processing parameters are stored, and you can select them on the menu or use a file that you have and load them via USB stick on the camera.”

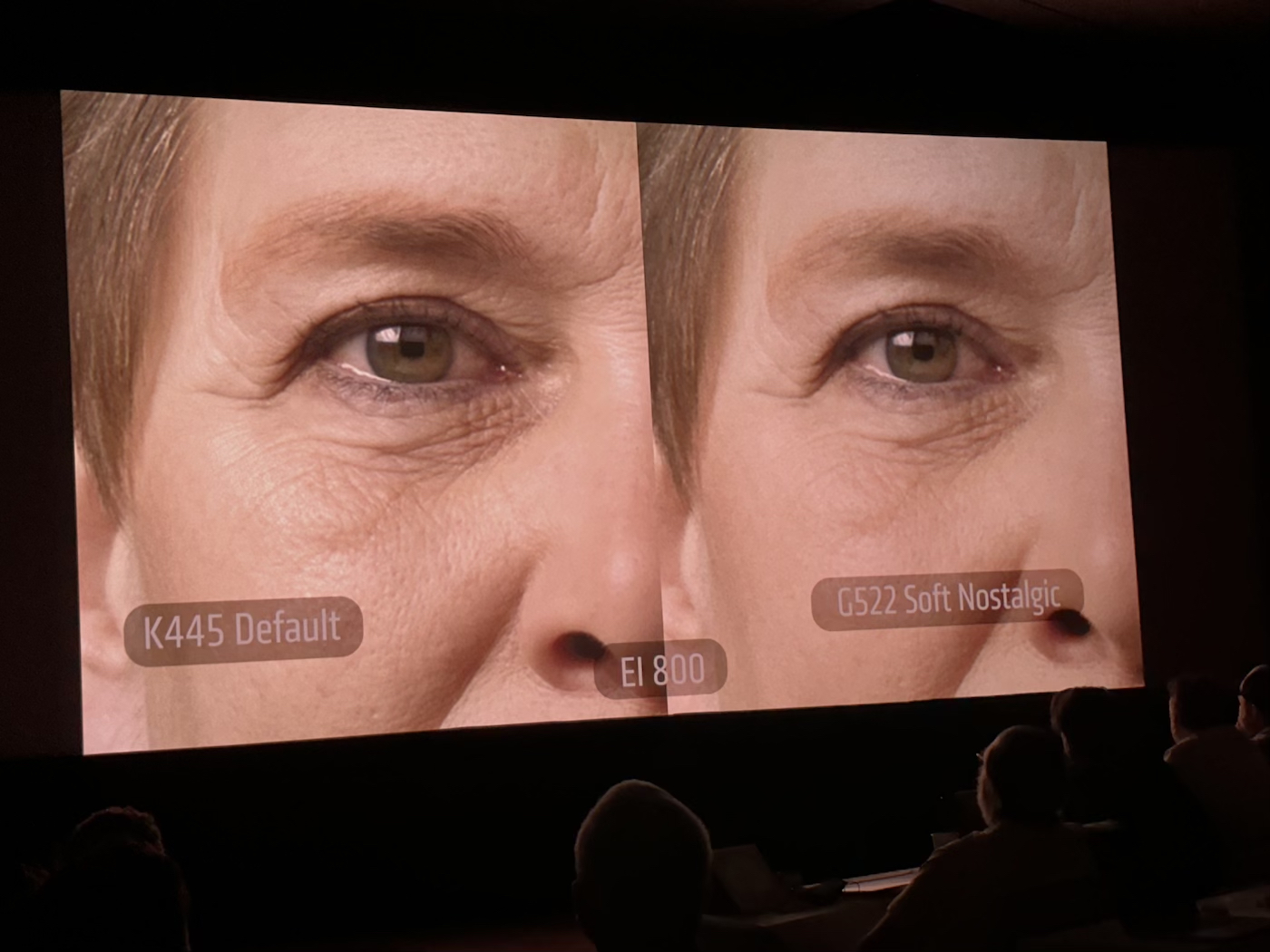

Seybold said one important aspect of Textures is that “they are baked into ProRes LogC and ARRIRAW. We did this out of technical necessity, as some part of the processing had to be done in camera,” she explained. “The Textures are a subtle way of adding to what’s there; they don’t replace more prominent approaches like vintage lenses or a film grain emulation in color grading.” Later, Mayr presented side-by-side examples of Textures that included footage captured at exposure indexes ranging from ISO 800 to 3200. The left side of each comparison showed the camera’s single default texture, and the right side showed one of the newly released Textures.

“The Cosmetic Texture is recommended for rendering skin tones,” Mayr shared. “It’s designed to be softer with lower contrast that makes the skin look a bit smoother but maintains sharpness in the eyes. The Soft Nostalgic Texture is grainy with a vintage feel that is softer than the default texture. Our most popular Texture is the Nostalgic, designed to register a lot of unsaturated grain as well as lower contrast to emphasize a nostalgic film emulation.”

One highlight from the Tech Retreat’s second day was a MovieLabs 2030 Vision Update panel with a who’s who of industry technologists. They included MovieLabs CTO Jim Helman; Annie Chang, VP creative technologies, Universal Pictures [ICG Magazine April 2023]; Shadi Almassizadeh, VP motion picture architecture and engineering with The Walt Disney Studios; Kevin Towes, director of product management for video at Adobe; Toby Scales, media and entertainment lead, Office of the CISO, Google Cloud; Mathieu Mazerolle, director of product for new technology at Foundry; and Katrina King, global strategy lead for content production with Amazon Web Services (AWS).

Acting as moderator, Helman broke the presentation into three areas: progress, security and interoperability, emphasizing the last as key to getting new digital workflows up into the cloud while still maximizing flexibility for users.

King kicked things off with her thoughts on the progression toward 2030. “If you had asked me a year ago to talk about a production that was run entirely in the cloud, I probably would have been stumped,” she announced. “But a few months ago, I had the pleasure of speaking with [representatives from] Company 3 about producing The Lord of the Rings: The Rings of Power in the cloud, so we are finally seeing inspiring examples of shows trying to holistically migrate to the cloud. The next day we heard from Jon Landau and Connelly about how Avatar [The Way of Water] was rendered in the cloud, using 3.3 billion core hours – with a b! I don’t want to manage that data center,” King laughed. “But there’s clearly a strong move toward building out end-to-end, holistic cloud-based workflows. And we see our role in that process as filling in gaps – for example, a gap analysis for color in the cloud.”

Of the Amazon-produced Rings of Power cloud workflow, Chang said that “talking to Company 3 and seeing this first-time example of having camera through finishing in the cloud was impressive and surprising. I always thought grading and finishing would be the last thing we’d do in the cloud, and it’s become one of the first things accomplished on this journey toward the 2030 Vision.”

When Helman asked Almassizadeh about moving from traditional “on-prem” to the cloud, the Disney technologist said, “If I could call one mulligan, three years into this 2030 journey, I wish we used the term ‘virtualization,’ not ‘cloud.’ The principles that the 2020 paper defined were about virtualizing a process that was historically labor-intensive, manual and completely four-walled. What we’re excited about is that the technology is catching up with how people want to work – whether that’s virtually replicating the NFS storage environment, which historically depended upon on-prem, or the containerization of the applications we’re working on, so they can be native to next-generation workflows.”

Interoperability was a big part of the MovieLabs 2023 panel, as Helman spoke about the group’s efforts in areas like data models and ontology. “MovieLabs helped develop this ontology for media creation that helped connect workflows back to things in the script, and Annie Chang has a clip to help illustrate these concepts.” Chang set up the video by noting that “there’s a common language we have when making films and television shows, and there should be a better way to associate things like scripts, schedules and the metadata. We want to create this backbone that you can attach an asset management system or other databases to and more easily associate those assets to that backbone of information. Once we have that backbone, we can search across the many different elements in a production. We hired [Production Cloud Technology Expert] Jeremy Stapleton, who built us a Neo4j database that contains content from Fast & Furious 8. Jeremy made this super-neat video that explains the process, which I can show to you all now.”

The video Stapleton produced broke down Universal Pictures’ goal concerning “creative intent” on the hit action franchise movie. The approach begins with the screenplay and tracks all assets for the film through the ontology. Using the Neo4j graph database, Stapleton showed how all the narrative elements related to a given scene could be associated with things like the production schedule, locations, performers on that day, and slate metadata from the physical shoot, as well as VFX pulls and VFX comps that were finalized into the film.

“By integrating with the ontology, software solutions can support creative teams to be more efficient by finding the information they need without duplicating metadata across multiple systems,” Stapleton explained in the video. “In cooperation with Microsoft, Universal is building an asset management solution asset flow to leverage our ontology data and help our users find and retrieve assets at any stage of the production lifecycle. Out of the hundreds of thousands of assets generated in preproduction and production, we can filter down what assets relate to Vin Diesel, a Dodge Charger, and the production location in Atlanta, and deliver all the elements related to this for the VFX work to begin.”
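The principle Stapleton describes, linking each asset to a shared backbone instead of copying metadata onto every asset, can be illustrated with a small in-memory graph. This is a hypothetical sketch only: the scene IDs, asset IDs and the `find_assets` helper are invented for illustration, and a system like the Neo4j database Chang mentioned would express the same traversal as a graph query rather than Python dictionaries.

```python
from typing import Dict, List, Set

# Hypothetical ontology backbone: each scene links to the narrative entities in it.
scene_entities: Dict[str, Set[str]] = {
    "scene_042": {"Vin Diesel", "Dodge Charger", "Atlanta"},
    "scene_043": {"Vin Diesel", "Atlanta"},
}

# Hypothetical assets: each stores only a link to its scene, not a copy of the scene's metadata.
asset_scene: Dict[str, str] = {
    "slate_0001": "scene_042",
    "vfx_pull_0017": "scene_042",
    "vfx_comp_0009": "scene_042",
    "slate_0002": "scene_043",
}


def find_assets(required: Set[str]) -> List[str]:
    """Return (sorted) assets whose scene is linked to every required entity."""
    matches = [
        asset
        for asset, scene in asset_scene.items()
        if required <= scene_entities.get(scene, set())
    ]
    return sorted(matches)


print(find_assets({"Vin Diesel", "Dodge Charger", "Atlanta"}))
# ['slate_0001', 'vfx_comp_0009', 'vfx_pull_0017']
```

Because the entity links live only on the scene, updating the backbone once changes every query result for the assets attached to it, which is the "without duplicating metadata" point in the quote above.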

Wednesday’s afternoon session also included a panel on a “Web3 Movie Experience” for The Lord of the Rings: The Rings of Power. Led by Mark Nakano, vice president of content security at WarnerMedia, the panel featured Jason Steinberg, managing partner and founder of Pretty Big Monster (a digital marketing agency focused on AR, VR, XR and immersive digital marketing), and Michelle Munson, co-founder and CEO of Eluvio, Inc. (creator of the Content Blockchain network and Content Fabric Protocol). Together they outlined the technical and content production details used to create a first-of-a-kind Web3-native movie experience that was sold to fans on the blockchain (a decentralized, distributed and public digital ledger that is used to record transactions across many computers). The immersive experience included access to the full 4K UHD film, embedded WebXR objects to discover, images, special audio commentary and hours of exclusive content.

Other panels late in Wednesday’s session included one titled “Remote, Mobile, and Live Workflow Innovation Updates.” Mark Chiolis, director of business development for Mobile TV Group (MTVG) and an HPA board member and ASC associate member, discussed the latest innovations in live sports and live event productions with fellow industry veterans Scott Rothenberg, senior vice president of program and planning for NEP; Nick Garvin, chief operating officer and managing partner for MTVG; and Gary D. Schneider, lead media systems architect at LinkedIn. The conversation was cloud-dominated, but also hit on home workflow solutions and how cinema methodologies have impacted live event capture.

Schneider spoke about LinkedIn’s “multi-site strategy” and the use of new technology across various facilities. “What’s important for us is that remote workflows and interoperability drove the design of each facility. Teams will serve different productions around the world from one location so that the network in the middle is the key to providing REMI [Remote Integration Model] streams for those productions. A recent example was a production out of our New York studio with the control room in Sunnyvale, CA. We had a host and guest in New York and a guest that was brought into the San Francisco studio. Given the schedule of the production team, they had to be in Mountain View, where the show was cut and finished. We’re also trying to extend our on-prem infrastructure into the cloud by leveraging TR-07 [the Video Services Forum specification for carrying JPEG XS video over IP] to get streams into a cloud environment for both file encoding for records as well as some production tools that would live in the cloud.”

Rothenberg outlined the changing ecosystem in live production. “We have traditional workflows, blended on-site and remote workflows, and then fully remote with distributed workflows,” he explained. “The big takeaway is that our clients are looking for more flexibility and efficiency in live production, to enable more creativity [in capture and storytelling] as well as more complete end-to-end solutions with fewer touchpoints,” he added. “Security is also a growing concern, as a single device could flood an entire network. And when we talk about ‘the network,’ it’s actually many, many small networks that all come together that need to work seamlessly. But we have disparate systems, standards that have varying degrees of interpretation, and a lack of skilled talent and training.”

Garvin introduced new workflows MTVG has added in 2022/23. “We recently completed our 51st mobile unit, and it’s a 1080p HDR system that’s fully IP and resides in the L.A. area,” he said. “We also completed the build of 25 cloud control rooms spread out around the country that remote directly into a mobile unit, as well as private/public/hybrid cloud workflows. We launched a new division called our Edge Series, which is a software-defined production system for small- to mid-tier events. It’s been a big success for those who don’t have the budget but can still use all the on-site computer power, which is, I suppose, in contrast to using the cloud, when it’s just unnecessary. That launched with the APP Pickleball League, which was very fun to watch!”

HPA’s final day of the retreat was centered on cloud and virtual production, with surprising viewpoints popping up in both hot-button areas. Moderated by Chris Lennon, director of standards strategy in Ross Video’s Office of the CTO, “Does Everything Really Belong in the Cloud?” was a lively panel that offered up some resistance to the inevitable march toward all things cloud-based. Panelists included Thomas Burns, Dell Technologies CTO for media & entertainment; Renard Jenkins, SMPTE president and former senior vice president, Production Integration & Creative Technology Services, at Warner Bros. Discovery; and Evan Statton, chief technologist in media and entertainment, AWS.

When Lennon asked the panel’s title question, Statton responded, “In time, yes. But there are some things that can’t go to the cloud – physical or virtual sets, unless you’re creating an entire movie that’s just rendered, and there are some of those, which probably do belong in the cloud. But there will always be a need for physical stuff on the edge. I will add that I’m glad we’re having this conversation. Ten years ago, we were talking about: ‘Will this even work?’ and I think we’ve spent a few days convincing ourselves that, yeah, this stuff is real and works. So now comes the exciting part, optimizing it and asking: ‘Where do we want to use this technology?’”

Burns added that “we’ve been talking about interoperability throughout this retreat, and to drill down into that concept, I would posit that what we need is a unified control plane that allows artists and operators to not even care where the actual resources they’re using are. We’ve been stuck in that classic hybrid workflow we call ‘rock, pebbles, sand,’ where the rocks are your fully capitalized on-prem assets, which hopefully you’re running at 99 percent efficiency; the pebbles are the project-based resources that are not part of your baseload; and the pure sand is the public hyperscalers you need in the last two months of the project just to finish. Unfortunately, the interoperability between the rocks, pebbles and sand just isn’t there yet. And we’ve talked about API [Application Programming Interface]-driven workflow orchestration as a way to get to that unified control plane at some point.”
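Burns’s “rock, pebbles, sand” model can be read as a tiered placement rule: fill capitalized on-prem capacity first, then project-based resources, and only then burst to public cloud. The sketch below is a hypothetical illustration of the kind of decision a unified control plane might automate; the tier names, capacities and the `allocate` function are assumptions for illustration, not any vendor’s API.

```python
from typing import Dict, List, Tuple


def allocate(demand: int, tiers: List[Tuple[str, float]]) -> Dict[str, int]:
    """Spread a workload (e.g. render nodes) across tiers in priority order.

    Each tier is (name, capacity); use float('inf') for elastic public cloud.
    Raises RuntimeError if the finite tiers cannot absorb the demand.
    """
    placement: Dict[str, int] = {}
    remaining = demand
    for name, capacity in tiers:
        if remaining <= 0:
            break
        used = int(min(remaining, capacity))
        if used > 0:
            placement[name] = used
        remaining -= used
    if remaining > 0:
        raise RuntimeError(f"{remaining} units could not be placed")
    return placement


# Hypothetical capacities: 100 on-prem nodes, 30 project nodes, unlimited cloud.
TIERS = [("rocks", 100), ("pebbles", 30), ("sand", float("inf"))]
print(allocate(80, TIERS))   # {'rocks': 80}
print(allocate(150, TIERS))  # {'rocks': 100, 'pebbles': 30, 'sand': 20}
```

The interoperability gap Burns describes is everything this toy hides: in practice each tier has its own scheduler, storage and security model, which is why he argues for API-driven orchestration on top of them.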

Lennon agreed, adding that a unified control plane “can’t come from a vendor or someone with an agenda. It needs to come from a neutral party – someone like an SMPTE,” he smiled. “So, I’m going to ask Renard to put his SMPTE hat on and talk about this question from that perspective.” Jenkins, SMPTE’s current president, also has a deep background in overseeing media-supply chains and launching live-streaming apps and cloud-based content. He observed that “there is certainly a need for dynamically active, smart microservices as a part of that universal control plane. But to get there, you need to have standards that will allow for interoperability. I do believe SMPTE could have an impact by looking at what benefits are coming from the open-source world, and where we can create standards that will push interoperability forward. Once that happens we need to move at a rapid pace – the key word being ‘rapid’ – to have vendors and users on board and push for adoption of those standards.”

Jenkins noted that “last year we were all at HPA saying there was no way anyone was going to do color in the cloud. And we’ve seen examples this year of getting really close and some success stories to share. All of us in the production space know that you can standardize certain things; I can build you a fly kit that will go on every production. But when you get there, I then have to create a bespoke solution for that to work. Using other people’s servers is the same thing: each time I’ll have to develop a bespoke solution based on what parts of the toolkit I’m pulling into it. If you add on top of that standards that help me put those puzzle [pieces] together, and I can be assured that if I follow those standards it’s going to work, the answer gets closer to, eventually, yes – everything belongs in the cloud.”

Following the cloud discussion, Disguise’s Addy Ghani moderated a panel titled “Real-World Production Tips for Implementing Virtual Production.” Panelists included Ely Stacy, Disguise’s technical solutions lead; Mike Smith, technical director at ROE Visual US; and Henrique “Koby” Kobylko, director of on-set virtual production for Fuse. Ghani, VP of virtual production at Disguise, began with a “shout-out to our smaller VP community, many of whom are here [as attendees]. It’s a close-knit community that’s always talking to each other, and that’s a big reason why the industry has matured and progressed so quickly.”

Ghani then asked each panelist to highlight their company’s place in the virtual production landscape, with Koby noting that “Fuse does many different things, but I’m going to focus on film and TV. We’ve built a lot of VP stages for that part of the industry. We built the stage for The Mandalorian, which helped create the boom for the VP community. Following that show’s success, we continued to build all of the stages for Industrial Light & Magic. Another interesting one was the Amazon VP stage in Culver City.” Koby added that the Fuse team “recently built a big water tank and LED stage behind it for Apple TV+’s Emancipation, and we own and operate our own stages as well.”

Smith shared that ROE Visual is a global LED manufacturer whose technology is deployed worldwide, including in many of the virtual production stages that have popped up in the last five years. “Traditionally we’ve done live event work, and while we still do a lot of that, virtual production has become our main focus.” Stacy explained how Disguise’s platform allows for a variety of live productions, “fixed install being our background leading into virtual production, extended reality, augmented reality, broadcast, a lot of different applications using real-time rendering and tracking and many more integrations through one platform.”

Ghani pointed out that “while this panel is centered on in-camera VFX, VP is really an umbrella term for many different creative tools. The tool sets we’ll cover today are how vehicle process is done, what a 2.5D workflow is and why we need it, Unreal Engine assets and when we use them, and the newcomer – multi-frame content, also known as ‘ghost frame.’” He then asked Koby to break down the priorities on set for a virtual production shoot. “The first conversation is before we get to set and what are the challenges for that specific production – what is the camera tracking solution?” Koby outlined. “What is the rendering solution? Are we using 2D plates or real-time content? Are we using in-camera VFX with LED walls? Before the shoot date, we’ve gone through some of these workflows with the DP, with a pre-light date and the content being QC’d so no time is wasted on stage. While the technology does allow us to make changes on the fly, we’ve found the more pre-planning, the better.”

The panelists all admitted VP’s tech-heavy workflow can present challenges on set. “You can be spending $100,000 a minute just waiting for things to be fixed on-set,” Ghani explained. “We want to do as much of the homework as we can beforehand, and that includes things users might not think about – lens calibrations, color calibrations, and changing content on the fly.” Stacy then walked attendees through a vehicle process shoot using VP. “This is a super-common use case for LED volumes, with cars driving through different environments. The quality of the asset can vary wildly, so some of the things to consider are: will we get a 360-degree video or 180 degrees – front, back or side? Previs for all the different angles required is critical in this use case. 360 has a lot of advantages as you can shoot in any direction. However, these are shot on camera arrays, which are located in different positions, and stitching them together can be challenging if the assets are delivered as individual plates. You either need to stitch them together in preproduction or live on set. Personally, as an operator, I prefer the assets to be pre-stitched as a single file without the car, 8K by 4K, which makes mapping that content to the many LED surfaces often found in a volume much easier.”
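Stacy’s preference for a single pre-stitched 8K by 4K file makes sense if that file is a 360-degree frame in the common 2:1 equirectangular layout, which is an assumption here since the panel did not name the projection. In that layout every viewing direction corresponds to one pixel, so each LED surface can sample the frame directly. The sketch assumes an 8192 x 4096 frame and uses a hypothetical `direction_to_pixel` helper; media servers handle this mapping internally and their conventions may differ.

```python
from typing import Tuple

# Assumption: "8K by 4K" read as an 8192 x 4096 equirectangular (2:1) frame.
WIDTH, HEIGHT = 8192, 4096


def direction_to_pixel(yaw_deg: float, pitch_deg: float) -> Tuple[float, float]:
    """Map a viewing direction to (x, y) pixel coordinates in an equirectangular frame.

    yaw_deg:   -180..180 degrees, 0 = frame center, positive = to the right.
    pitch_deg: -90..90 degrees, 0 = horizon, positive = up (y grows downward).
    """
    # Longitude maps linearly across the full width; wrap so +180 and -180 meet at the seam.
    x = ((yaw_deg + 180.0) / 360.0 * WIDTH) % WIDTH
    # Latitude maps linearly down the height, with straight up at row 0.
    y = (90.0 - pitch_deg) / 180.0 * HEIGHT
    return x, y


# An LED panel straight ahead of the car samples the center of the plate;
# one up and to the right samples the upper-right quadrant.
print(direction_to_pixel(0, 0))    # (4096.0, 2048.0)
print(direction_to_pixel(90, 45))  # (6144.0, 1024.0)
```

With separate, unstitched plates, the same lookup would first have to decide which camera in the array covers a given direction and then blend across seams, which is the on-set stitching burden Stacy would rather avoid.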

HPA also devoted a full day to the programming and technology innovations impacting sports broadcasting, continuing a trend that started a few years ago. Sports producers are increasingly turning to larger-format theatrical cameras to capture interstitial material that brings audiences deeper into the behind-the-scenes stories of the game. They cited the larger format’s shallower depth of field and selective focus, along with the mobility of Steadicam and stabilized systems like the Mōvi, as enabling a visual narrative that stands in contrast to the look of the traditional broadcast cameras used for game coverage. Producers also noted that sports crews lack experience with narrative shooting styles, and that crewing options need to expand to include camera crew members with narrative and unscripted experience. There are concerns about the communication barriers presented by bringing non-sports camera crews into live game production, but there will be work opportunities for those who learn to make the jump.

A second theme in live sports was the growing ability to use internet connectivity to support remote, centralized control rooms. This opens the possibility of Video Controllers working remotely instead of traveling with the teams. Production trials of remote control rooms for live events have become possible thanks to recent advances in low-latency IP (internet protocol) video transmission and switching gear, as well as the installation of massive data pipes into the venues.

ICG's presence at NAB 2023 included sponsoring two panels and casting a wide net across our many vendor partners, who returned to Las Vegas in numbers nearly comparable to pre-COVID years, with more than 1,200 exhibiting companies. This was NAB's centennial (100-year) show, and while overall attendance was still feeling some of the blues from a three-year-long pandemic – at roughly 65,000 registered attendees – there was no lack of leading-edge gear and IP scattered throughout the show's two main halls.

For ICG staffers in attendance (who included ICG Magazine Publisher Teresa Munoz, Business Representative and Technology Specialist Michael Chambliss, Communications Coordinator Tyler Bordeaux, and New Mexico-based Unit Still Photographer Karen Kuehn), NAB officially kicked off Sunday night with visits to two key vendor-partner events. The first, hosted by Burbank-based Band Pro Film & Digital Inc. at its NAB floor booth, was led by Band Pro Marketing Director Brett Gillespie and industry technology veteran Tim Smith (formerly with Canon). Both spoke at length about industry trends in cameras and lenses, including new lenses in the vendor lines Band Pro represents, specifically vintage lenses being re-housed to accommodate modern high-resolution digital sensors, and the impact of those lenses on Local 600 focus pullers, DPs, and operators. The second, hosted by software giant Adobe on the Encore Hotel's patio, featured tech reps from the San Jose-based company detailing recent advances in virtual production, as well as trends in AI/Cloud workflows.

On Monday, the first full weekday of NAB, ICG Rep. Chambliss moderated a panel entitled “Hitting 2023 Sustainability Goals from Prep to Wrap,” which featured ECA Award-winning Director of Photography Cynthia Pusheck, ASC; Zena Harris, president of Green Spark; Senior Director of Sustainability for MBS Studios Amit Jain; and Koerner Camera Systems Rental Manager Sally Spaderna. The panel was co-sponsored by PERG (Professional Equipment Rental Group), which has worked with ICG’s Sustainability Committee to create “green” guidelines for camera rental houses.

Chambliss kicked off the panel by noting that “sustainability in the film and TV industry is a big, complex subject that’s comprised of, maybe, thousands of smaller pieces. We’re going to start big picture and then dig down to hear from the panelists about individual efforts being made to reach a goal of 50 percent carbon emissions reduction by 2030.” Chambliss pulled up a PowerPoint slide that Harris explained was derived in part from a report done by the Sustainable Production Alliance (SPA), a group of 13 studios and streamers who collectively aggregated data a few years back. “Across all productions,” Jain jumped in, “the major takeaway is fuel. That’s a direct emission to the atmosphere, in places like fleet and generators.” Harris then asked, “What does 3,370 metric tons of carbon dioxide really mean? It’s something like 750 gas cars driving for a year, circling the earth 347 times. It’s a stunning comparison and provides some idea of how critical this effort is from every production department to avoid catastrophic climate change.”

Pusheck noted that she first heard about Harris' company, Green Spark, on a show she was lucky to work on. "It's very top down, and we were lucky to have a producer who really cared about this and challenged each department to reduce fuel. We had to tell our camera team: 'We can't do rushes to get equipment. We're going to wait and do three or four runs.' There were a lot of small, subtle ways, and every department was keyed in to how to reduce fuel, like my asking production for a green car and encouraging the crew to bike to set. It was great. But not every show is that committed." Spaderna provided the rental house perspective, adding that "we need to encourage productions to build these sustainable offsets into their budget lines. With fuel and travel being two of the heaviest hitters, if you are shooting in a smaller market, look into renting from local vendors who serve those markets rather than trucking or shipping/flying in all of your equipment. It can be a major cost offset, and we are trying to convey that message to productions."

“Power and transportation are clearly the major components to reducing emissions,” Chambliss followed up. “And we can break that down into power generation and management, scaling power to lighting, digital scouting and local sourcing, which Sally just talked about.”

"With regard to power generation that's done on location, or even at the studio lot if it's an older facility that has not had its infrastructure upgraded," Jain picked up, "[the question] is, if you have to use generators, can you use renewable diesel? Is there a drop-in fuel that doesn't require any retrofits? Last year, the U.K. had a subsidy making renewable [diesel] the same cost as regular diesel, and a lot of the productions shooting there were required to use renewable diesel. That's the first step. The second would be trying to reduce generator use, or replacing them with electric generators. We have a fleet in Canada going up to 225 kilowatt-hours. LED lighting will also help reduce consumption. Ultimately, what we're talking about is preplanning to have the right amount of power. Losing power on a set can mean someone losing a job, but if we preplan and scale down into smaller electric systems, we can be targeted and successful."

Harris added that “we want productions to have sustainability in mind when they set about planning their power needs. For example, is there an opportunity at the location you’re shooting to plug into the grid to get your power? That’s an easy way to get cleaner energy than firing up a diesel generator. A problem-solving ethic is not new to this industry, so opening up the dialogue about how to get a shot or scene in a more sustainable way will create a lot of opportunities for a cleaner industry.”

Monday at NAB also included a workshop on body mechanics for female camera operators led by former Local 600 member Liz Cash. ICG's July issue offers more extensive coverage of Cash's unique journey from Guild camera assistant to head of her own company, Liz Cash Strength and Conditioning, which aims to extend the careers (and long-term health) of union camera operators. Briefly summarized, Cash's workshop at the CineCentral Pavilion, in the rear of NAB's Central Hall, had dozens of NAB attendees going through various strength, conditioning, and biomechanics tests at Cash's direction. Cash's targeted (and relatively simple) drills included stability versus mobility, a balance evaluation called the Sharpened Romberg, which tests the vestibular and proprioceptive (balance) systems, and a 360-degree diaphragmatic breathing assessment. Attendees also put on various camera support rigs that Cash and camera-support technicians adjusted and evaluated, offering tips on posture, weight distribution, back support, and more.

The day concluded with an ICG-sponsored party at CineCentral, where Green Spark's Zena Harris described various use cases of Guild members attempting to green up sets. Guild DITs Dane Brehm, Johanna Salo, and Pete Aguirre also talked about how the DIT craft can and will evolve given recent trends in virtual production. All three Local 600 members were part of a Tuesday morning presentation in the CineCentral Pavilion, sponsored by ICG and moderated by ICG Technology Specialist Chambliss, entitled "Mix Your Secret Sauce with DITs." Brehm and Salo spoke to a group of production professionals ranging from producers and DPs to aspiring DITs and camera crew members about the problems DITs solve and how the efficiencies they bring help productions make the day. The two DITs broke their work down into preproduction, camera prep, on-set work, and postproduction, mixed in with a lively Q&A from attendees on topics in each area. As part artist, part technician, and part workflow specialist, Brehm and Salo explained, they integrate the creative voices of the director and DP, the camera department's technical requirements, and production's need for reference images and dailies into the technical requirements of studios, editorial, VFX, and post.

Brehm was also a presenter at the University of Southern California's Entertainment Technology Center (ETC) booth, shared with Hewlett-Packard, where he outlined his and Salo's roles in the ETC-produced virtual production/cloud computing short film Fathead. Brehm, who says most of the attendees he talked with at NAB were working professionals, discussed "Fathead's workflow methods and technologies for us around VP, cloud, and how technicians adapt to the VP landscape in regard to color, lighting, lensing, and using Unreal Engine along[side] the Amazon Studios Virtual Production team."

In ETC’s white paper, the authors note Fathead centers on a “fictional junkyard where unsupervised children roam free. Most of the cast were children, and only one adult actor was featured in the script. The combination of needing to be in a hazardous environment with children and many of the sequences taking place at night naturally benefited from shooting in an LED volume, where any time of day or night could be achieved with the click of a button, and the perils were averted by manufacturing the environments digitally.”

As to the current state of ICG's DIT, loader, and utility positions, Brehm says, "Excluding the current WGA strike, it's been very strong across many states, especially in smaller markets like Michigan, Kentucky, Nashville, South Carolina, and New Mexico. The DIT position is constantly challenged by new camera systems, like the ALEXA 35, Big Sky, and the RED Connect system. These systems allow greater freedoms for DITs to help execute the vision of the cinematographer while optimizing color space, resolution, and the data pipelines that productions require."

Other highlights from Tuesday at NAB included representatives from Blackmagic Design showcasing the company's new 12K URSA digital cinema camera and its use on recent union productions, as well as additions to its Cintel film scanner and a potential resurgence in shooting film by Local 600 members. Main Stage panels were headlined by a seminar on Immersive Storytelling (VR/AR/XR) and how ongoing trends will impact Local 600 membership. Product demos included a visit to the ASUS booth and a rep from CalMan software discussing monitor calibration as it relates to the on-set work of Local 600 DITs.

Mo-Sys ran a demonstration at the NAB Show of how several technologies can work together to solve tricky production problems. Using a smallish 12-foot LED wall, a turntable, and programmed LED lighting, they executed the typically difficult-to-light 360-degree shot, with the camera on a tripod and the talent standing on the turntable, while the computer changed the background behind them and the programmed LED lighting followed the move. The point was that virtual production techniques can be applied at smaller scales, too. A variety of companies showed approaches to porting virtual environment technology originally developed for newsrooms and talk shows into theatrical applications. Other products of note included Atomos camera-to-cloud proxies, a variety of ergonomic camera support systems, and an explosion of new lens manufacturers.